What Are Bots?

Unlike the robots assembled for battles or industrial plant use, an internet bot is simply a few lines of code with a database.

An internet or web bot is simply a computer program that runs on the web. Usually, bots are programmed to do certain tasks, like crawling or chatting with users, faster than humans can.

Search bots, also called crawlers, spiders, or wanderers, are the computer programs used by search engines such as Google, Yahoo, Microsoft Bing, Baidu, and Yandex to build their databases.

Bots find the different web pages of a site by following links. Then they download and index the content from those pages; the goal is to learn what every web page is about. This is called crawling: the bot automatically accesses the websites and obtains that data.
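The link-following step can be sketched in a few lines of Python. This is only an illustration and not how any particular search engine works: the `LinkCollector` class, the `extract_links` helper, the sample HTML, and the example.com URL are all made up for the example, and a real crawler would also download pages, respect robots.txt, and avoid visiting the same page twice.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects the target of every <a href="..."> tag in a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page's own URL.
                self.links.append(urljoin(self.base_url, value))


def extract_links(base_url, html):
    """Return the absolute URLs a bot could visit next."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.links


# Hypothetical page content, used instead of a real download.
SAMPLE_PAGE = '<a href="/about.html">About</a> <a href="contact.html">Contact</a>'

links = extract_links("https://example.com/index.html", SAMPLE_PAGE)
print(links)
```

Each URL in `links` is a page the bot could download next, which is how a crawler gradually covers a whole site.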

Are Bots Harmful to Your Website?

Beginners might get confused about bots: are they good for the website or not? Several good bots, such as search engine, copyright, and site monitoring bots, are important for the website.

Search Engine:

Crawling the site helps search engines provide adequate information in response to users’ search queries. It generates the list of appropriate web content that shows up when a user searches on search engines like Google, Bing, and so on; as a result, your website gets more traffic.

Copyright:

Copyright bots check the content of websites for violations of copyright law. They can be operated by the company or person who owns the copyrighted content. For example, such bots can check for text, music, videos, and so on across the internet.

Monitoring:

Monitoring bots monitor the website’s backlinks and system outages, and give alerts about downtime or major changes.

Above, we have learned enough about the good bots; now let’s talk about their malicious use.

One exploitative use of bots is content scraping. Bots often steal valuable content without the author’s consent and store it in their own database on the web.

Bots can also be used as spambots, which check web pages and contact forms to collect email addresses that can then be used to send spam and are easy to compromise.

Last but not least, hackers can use bots for hacking purposes. Usually, hackers use tools to scan websites for vulnerabilities. However, a software bot can also scan websites over the internet.

Once the bot reaches the server, it discovers and reports the vulnerabilities that help hackers take advantage of the server or website.

Whether bots are good or used maliciously, it’s always better to manage or stop them from accessing your website.

For example, crawling of the site by a search engine is good for SEO; however, if a crawler requests the site or its web pages many times in a fraction of a second, it can overload the server by increasing the usage of server resources.

How to control or stop bots using robots.txt?

What is robots.txt?

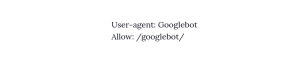

The robots.txt file contains the set of rules that manages how bots access your website. This file lives on the server and specifies the rules for any bot accessing the site. In addition, these rules define which pages to crawl, which links to follow, and other behavior.

For example, if you don’t want some web pages of your website to show up in Google’s search results, you can add rules for them in the robots.txt file, and Google will not crawl those pages.

Good bots will certainly follow these rules. However, bots cannot be forced to follow the rules; that requires a more active approach: crawl rate, allowlist, blocklist, and so on.

Crawl rate:

The crawl rate defines how many requests a bot can make per second while crawling the site.

If a bot requests the site or its web pages many times in a fraction of a second, it can overload the server by increasing the usage of server resources.

Note: Not all search engines support setting the crawl rate.
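In robots.txt, the crawl rate is usually expressed with the non-standard `Crawl-delay` directive, which gives the number of seconds a bot should wait between requests. Some crawlers, including Googlebot, ignore it. The sketch below uses Python’s standard `urllib.robotparser` module to show how a bot that does honor the directive might read it; the bot names and delay values are assumptions chosen for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the bot names and delays are examples only.
ROBOTS_TXT = """\
User-agent: bingbot
Crawl-delay: 5

User-agent: *
Crawl-delay: 10
"""


def load_rules(robots_txt):
    """Parse robots.txt text without downloading anything."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)

# Seconds the named bot is asked to wait between two requests.
bing_delay = rules.crawl_delay("bingbot")
# Bots without their own section fall back to the "*" section.
default_delay = rules.crawl_delay("SomeOtherBot")

print(bing_delay, default_delay)
```

A polite bot would sleep for the returned number of seconds between requests; a bot that ignores the directive has to be slowed down on the server side instead.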

Allowlist

For example, suppose you have organized an event and invited some guests. If anyone who isn’t on your guest list tries to enter the event, security personnel will stop them, but anyone on the list can enter freely; this is how web bot management works.

Any web bot on your allowlist can easily access your website. To set this up, you identify the bot by its “user agent,” its “IP address,” or a combination of the two. Note that robots.txt rules match on the user agent only; IP address rules have to be enforced on the server or firewall.

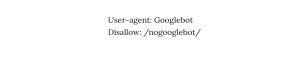
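As a rough sketch of an allowlist, the robots.txt content below lets one named bot crawl everything and asks every other bot to stay out. Python’s standard `urllib.robotparser` module is used only to check how a rule-following bot would interpret it; the choice of Googlebot, the other bot name, and the example.com URL are assumptions made for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical allowlist: only Googlebot may crawl, everyone else is disallowed.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /

User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

page = "https://example.com/blog/post.html"

# The bot on the allowlist may fetch the page.
listed_bot_allowed = parser.can_fetch("Googlebot", page)
# A bot that is not on the list falls under the "*" rule.
other_bot_allowed = parser.can_fetch("SomeOtherBot", page)

print(listed_bot_allowed, other_bot_allowed)
```

This only works for bots that choose to obey robots.txt; a bot that lies about its user agent or ignores the file has to be filtered on the server.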

Blocklist

While an allowlist permits only specified bots to access the site, a blocklist is slightly different. A blocklist blocks only the specified bots, while all others can access the URLs.

For example: To disallow crawling of the entire website.
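A minimal blocklist along these lines might look like the robots.txt content in the sketch below, which disallows the whole site for one bot and leaves it open to the rest. The bot name `BadBot` and the example.com URL are hypothetical, and Python’s standard `urllib.robotparser` module is used only to confirm how the rules are read.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical blocklist: "BadBot" is a made-up name for an unwanted crawler.
ROBOTS_TXT = """\
User-agent: BadBot
Disallow: /

User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

home = "https://example.com/"

# The blocked bot is disallowed from the entire site.
blocked_bot_allowed = parser.can_fetch("BadBot", home)
# An empty Disallow line means nothing is off limits for other bots.
other_bot_allowed = parser.can_fetch("Googlebot", home)

print(blocked_bot_allowed, other_bot_allowed)
```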

Block URLs.

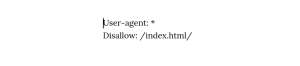

To block a URL from being crawled, you can define simple rules in the robots.txt file.

For example: In the user-agent line, you can name a specific bot, or use an asterisk to block all bots from that specific URL.

(It will block all robots from accessing index.html. You can specify any directory instead of index.html.)
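The rule described above can be written out and checked with Python’s standard `urllib.robotparser` module. This is a sketch under assumptions: the bot name and the example.com URLs are made up, and only rule-following bots will honor the result.

```python
from urllib.robotparser import RobotFileParser

# The asterisk applies the rule to every bot; only /index.html is blocked.
ROBOTS_TXT = """\
User-agent: *
Disallow: /index.html
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The blocked URL may not be crawled by any bot.
index_allowed = parser.can_fetch("AnyBot", "https://example.com/index.html")
# Other URLs on the same site are unaffected.
about_allowed = parser.can_fetch("AnyBot", "https://example.com/about.html")

print(index_allowed, about_allowed)
```

Replacing `/index.html` with a directory path such as `/private/` would block every URL under that directory in the same way.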
