We regularly discover a seemingly sudden leap in utilization and associated expertise developments just because what was as soon as unfeasible is now sensible. The rise in Huge Knowledge purposes follows intently behind the unfold of cloud computing. Let’s deal with what Huge Knowledge is, why it issues right this moment, and the way it has advanced in tandem with NoSQL databases. After we discuss Huge Knowledge, we’re coping with large portions of info that we will take a look at, or analyze, to search out one thing related.

Huge Knowledge usually has three traits every marked by the three Vs.

- Volume – We now have loads of information.

- Velocity – Our information is coming in quick.

- Variety – Our information is in many alternative kinds.

Let’s dive into how we get a lot information, forms of information, and the worth we will derive from it.

Drawing Conclusions

We’d like giant units of information to search out underlying patterns as a result of small units of information are unreliable in representing the actual world. Think about taking a survey of 10 individuals: eight of them have Android telephones, two have iPhones. With this small pattern dimension, you’d extrapolate that Apple solely has a 20% market share. This isn’t a very good illustration of the actual world.

It’s additionally vital to get info from a number of demographics and places. Surveying 10 individuals from Philadelphia, Pennsylvania doesn’t inform us a lot in regards to the world, america, and even the state of Pennsylvania as a complete. Briefly, getting good, dependable information requires loads of it. The broader the research, the extra we will break it down and draw conclusions.

Let’s up our survey from 10 to 100 and in addition document the age of the contributors. Now we’re accumulating extra information from a bigger pattern dimension. Now, let’s say the outcomes present that 40 individuals have Android telephones and 60 have iPhones. That is nonetheless a really small pattern however we will see {that a} 10x improve in contributors resulted in a big 80 level swing in our outcomes. However that’s solely contemplating one area of information from our set. Since we recorded our contributors’ age in addition to telephone selection, we would discover that teams aged 10-20 or 21-30 have a really totally different ratio.

It’s All In regards to the Algorithm

Huge Knowledge has us processing giant volumes of information coming in quick and in a wide range of codecs. From this information, we’re capable of finding underlying patterns that enable us to create correct fashions that mirror the actual world. Why does this matter? Correct fashions enable us to make predictions and develop or enhance algorithms.

The most typical instance of Huge Knowledge at work in our day by day lives is one thing easy and typically controversial – advice engines. “For those who like X, you’ll most likely like Y, too!” That is definitely helpful from a advertising and promoting perspective, however that is removed from the one use case. Huge Knowledge and algorithms energy every thing from self-driving vehicles to early illness detection.

In our quick instance of information assortment, we stopped at 100 individuals, however in case you actually need good information, you want 1000’s or tens of millions of sources with a magnitude of various attributes. This nonetheless wouldn’t really qualify as “Huge Knowledge,” even when we expanded the pattern dimension and arrange a speedy ingest of outcomes. We’d be lacking one of many three Vs, Selection, and that’s the place a bulk of our information comes from.

Knowledge Varieties

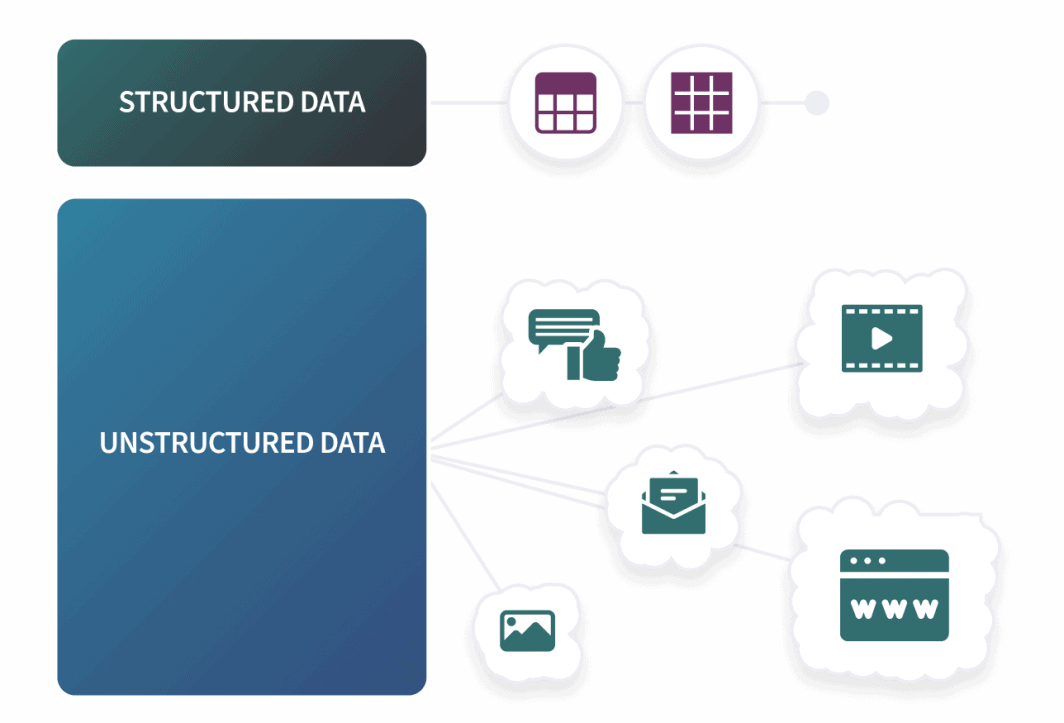

We will classify the kind of information we gather into three fundamental classes: Structured, Semi-Structured, and Unstructured. Structured information could be just like our survey above. We now have a predefined schema and our enter will match right into a inflexible construction. Any such information is ideal for RDBMSs utilizing SQL since they’re designed to work with rows and columns. Exterior of SQL databases, structured information usually consists of csv recordsdata and spreadsheets.

A overwhelming majority of the info that exists is coming from loads of totally different sources from our day after day actions in loads of other ways. Social media posts, buying historical past, looking and cookies: Each motion can construct a profile for a person with quite a few attributes similar to age, location, gender, marital standing, and past. We’re simply scratching the floor right here however we solely have to deal with the next: industries are accumulating loads of information to attract correct conclusions and a overwhelming majority of this information shouldn’t be in predefined, structured codecs. For Huge Knowledge, we’re normally working with Semi-Structured and Unstructured types of information.

Utility logs or emails are examples of semi-structured information. We name this semi-structured as a result of whereas it’s not in inflexible rows and columns, there’s a normal sample to how this information is formatted. Two of the commonest file forms of semi-structured information are JSON and XML. Unstructured information could be virtually something that isn’t structured or semi-structured, and as we will think about, this makes up a overwhelming majority of our information. Widespread examples of unstructured information embrace social media posts, audio and video recordsdata, photos, and different paperwork.

Our telephone selection survey nonetheless works as an analytical demonstration : The extra information we’ve, the extra precisely our conclusions will mirror the actual world, however to really get extra information we have to have a system able to ingesting extra than simply structured information. That is the place NoSQL databases enter the equation.

Huge Knowledge and NoSQL

The idea of massive information has been recognized for the reason that Nineteen Eighties, and like a lot of right this moment’s fastest-growing applied sciences, it took a significant step ahead within the mid 2000s. A milestone hit when Apache launched Hadoop in 2006. Hadoop is an open supply software program framework designed to reliably course of giant datasets.

A few of the core elements embrace HDFS (Hadoop Distributed File System) and YARN (Yet Another Resource Negotiator). HDFS is a quick and fault tolerant file system and YARN handles job scheduling and useful resource administration. Operating on high of HDFS usually is HBase, a column-oriented non-relational database. HBase matches the unfastened definition of NoSQL nevertheless it’s totally different sufficient from the opposite common databases that it gained’t usually seem on the identical lists as MongoDB or Cassandra (one other Apache venture).

HBase in tandem with HDFS can retailer large quantities of information in billions of rows and helps sparse information. Nevertheless, it’s not with out its limitations. HBase relies on HDFS, has steep {hardware} necessities, and lacks a local question language. Not like Mongo and Cassandra, HBase additionally depends on primary-replica structure that can lead to a single level of failure.

However proper from the start, we will see why Huge Knowledge and NoSQL are a match. Let’s run by means of the Vs once more.

- Velocity – NoSQL databases lack the consistency and validation of SQL databases, however once more the uncooked write pace we have to ingest loads of information, shortly.

- Variety – Huge Knowledge requires a system able to dealing with unstructured information and schemaless NoSQL databases like MongoDB are effectively fitted to the duty.

NoSQL databases are usually not solely used for Huge Knowledge, however we will see why they developed in lockstep with each other. There aren’t any indicators of a Huge Knowledge slowdown, and the NoSQL MongoDB, first launched in 2009, is likely one of the quickest rising databases available on the market.